Indirect measurement turns quantities we cannot observe directly into usable numbers. It does this by relying on relationships, models, and hidden assumptions that shape every estimate we make.

🔍 The Foundation of Indirect Measurement in Modern Science

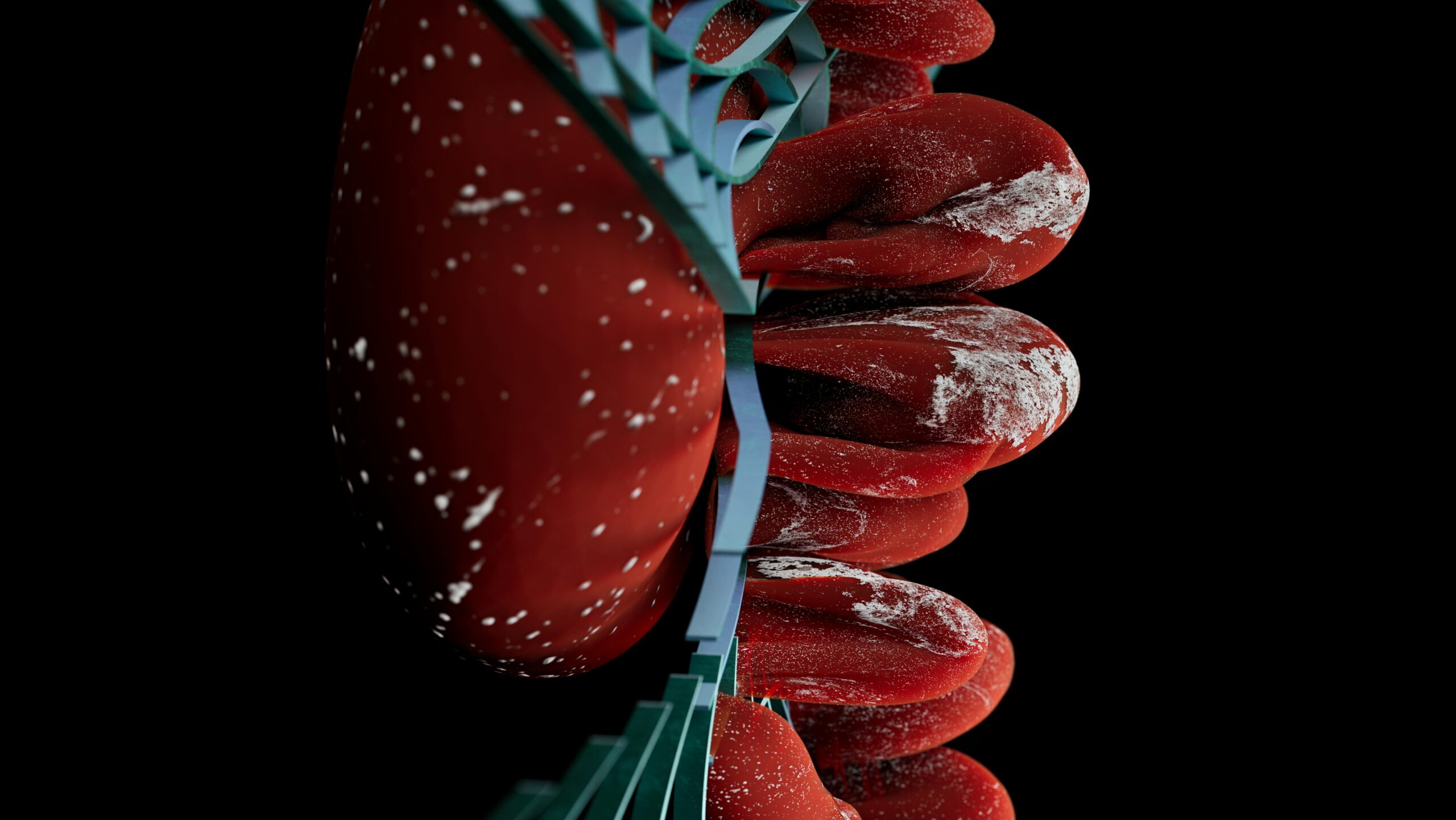

When we cannot measure something directly, we turn to indirect measurement: inferring the quantity from observable proxies through mathematical relationships. This approach powers everything from calculating the height of mountains to estimating the distance between stars. Yet beneath every indirect measurement lies a web of assumptions, and those assumptions determine whether our results can be trusted.

Understanding these hidden assumptions is crucial for anyone working with data, from engineers and scientists to business analysts and researchers. The accuracy of your estimations depends not just on your calculations, but on recognizing what you’re taking for granted in your measurement approach.

🎯 Understanding the Core Principles Behind Indirect Estimation

Indirect measurement operates on a fundamental principle: if we know the relationship between two variables, measuring one allows us to calculate the other. This sounds simple, but the complexity emerges when we examine the assumptions embedded in those relationships.

The Proxy Variable Challenge

Every indirect measurement uses proxy variables: observable quantities that stand in for what we actually want to measure. When astronomers measure stellar distances using parallax, they assume the Earth’s orbital radius is accurately known. When engineers use sonar to measure ocean depth, they convert echo travel time into distance by assuming a speed of sound. That speed in turn depends on the water’s temperature, salinity, and pressure.

These proxy relationships carry inherent assumptions about stability, linearity, and causation. Recognizing these assumptions transforms measurement from a mechanical process into a thoughtful practice that accounts for uncertainty and contextual factors.

📊 The Mathematical Models That Shape Our Measurements

Mathematical models form the bridge between observable data and target quantities. These models encode relationships, but they also encode assumptions about how the world works. A linear model assumes a constant rate of change. An exponential model assumes a constant proportional growth rate. Each choice has consequences for measurement accuracy, especially when we extrapolate beyond the data used to fit the model.
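The choice of model is itself an assumption. The minimal Python sketch below uses two invented observations to show this. Both models pass exactly through the same two points, yet they disagree sharply once we extrapolate, and nothing in the data alone tells us which one is right.

```python
# Two hypothetical observations of some quantity: (time, value).
t0, y0 = 0.0, 100.0
t1, y1 = 1.0, 120.0

def linear_model(t):
    """Assumes a constant absolute rate of change between the observations."""
    slope = (y1 - y0) / (t1 - t0)
    return y0 + slope * (t - t0)

def exponential_model(t):
    """Assumes a constant relative (percentage) growth rate."""
    growth_factor = (y1 / y0) ** (1.0 / (t1 - t0))
    return y0 * growth_factor ** (t - t0)

for t in (1.0, 5.0, 10.0):
    print(f"t={t:4.1f}  linear={linear_model(t):8.1f}  exponential={exponential_model(t):8.1f}")
```

At t = 10 the linear model gives 300, while the exponential model gives about 619. The gap comes entirely from the modeling assumption.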

Calibration: Where Assumptions Meet Reality

Calibration represents the critical process of aligning our models with real-world behavior. When we calibrate a thermometer, we’re not just setting reference points—we’re validating the assumption that temperature changes produce consistent, predictable effects on mercury expansion or electrical resistance.

The calibration process reveals hidden assumptions. Does your model hold across the entire measurement range? Are environmental factors affecting the relationship? Does the proxy variable remain stable over time? These questions separate robust measurements from fragile ones.
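A two-point calibration makes these questions concrete. The Python sketch below is illustrative only: the raw sensor readings are hypothetical, and `two_point_calibration` is a name chosen for this example rather than any library’s API. It fits a straight line through two reference points, then uses a third, independent reference to test whether the linearity assumption holds between them.

```python
def two_point_calibration(raw_low, ref_low, raw_high, ref_high):
    """Return a function mapping raw sensor readings to calibrated values.

    Assumes the sensor responds linearly; a separate check point is what
    tests that assumption.
    """
    gain = (ref_high - ref_low) / (raw_high - raw_low)
    offset = ref_low - gain * raw_low
    return lambda raw: gain * raw + offset

# Hypothetical raw readings in an ice bath (0 °C) and boiling water (100 °C).
# The 100 °C reference itself assumes standard atmospheric pressure.
calibrate = two_point_calibration(raw_low=412.0, ref_low=0.0, raw_high=1388.0, ref_high=100.0)

# A third, independent reference point checks the linearity assumption.
check_raw, check_ref = 905.0, 50.0
residual = calibrate(check_raw) - check_ref
print(f"Residual at check point: {residual:+.2f} °C")
```

A residual of about +0.5 °C at the check point suggests that either the sensor is not perfectly linear or one of the references was off. The calibration alone cannot reveal which.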

🌡️ Temperature and Heat: A Case Study in Hidden Assumptions

Temperature measurement perfectly illustrates indirect measurement’s complexity. We cannot directly observe temperature—instead, we measure effects like thermal expansion, electrical resistance changes, or infrared radiation emission. Each method carries distinct assumptions.

Mercury thermometers assume uniform expansion rates and consistent glass properties. Resistance thermometers assume stable material characteristics and negligible self-heating effects. Infrared thermometers assume known emissivity values and transparent atmospheric conditions.

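When these assumptions fail, measurements diverge from reality. A mercury thermometer lags behind rapid temperature changes, because its bulb and contents must themselves heat up or cool down. An infrared thermometer aimed at shiny metal typically reads too low. Low-emissivity surfaces emit less infrared radiation than the instrument expects and partly reflect their surroundings instead. Understanding these limitations prevents measurement errors.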
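A common simplification makes the lag concrete. It treats the thermometer as a first-order system whose reading relaxes exponentially toward the true temperature with a time constant τ. Both the model and the value τ = 10 s below are assumptions for illustration, not properties of any particular instrument.

```python
import math

def first_order_reading(t, t_start, t_env, tau):
    """Reading of a sensor modeled as a first-order lag after a step change.

    t:       seconds since the step
    t_start: sensor temperature before the step (°C)
    t_env:   new environment temperature (°C)
    tau:     time constant in seconds (hypothetical; set by thermal mass
             and how well the sensor couples to the medium)
    """
    return t_env + (t_start - t_env) * math.exp(-t / tau)

# Hypothetical: a thermometer at 20 °C is plunged into 80 °C water, tau = 10 s.
for seconds in (0, 5, 10, 30, 50):
    reading = first_order_reading(seconds, t_start=20.0, t_env=80.0, tau=10.0)
    print(f"t={seconds:3d} s  reading={reading:5.1f} °C  error={reading - 80.0:+5.1f} °C")
```

After one time constant the reading has covered only about 63% of the step. Anyone who reads the thermometer too early records a temperature that never existed in the water.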

🏔️ Distance Measurement: From Triangulation to Cosmic Scales

Distance measurement shows how assumptions stack up as we extend our reach. Measuring a building’s height with trigonometry assumes level ground, a known horizontal distance, and an accurate angle measurement. Measuring mountain heights above sea level assumes a well-defined reference surface, such as a model of mean sea level (the geoid). The shape of that surface itself depends on variations in Earth’s gravity field.
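The building case can be written out directly as height = distance × tan(angle) + eye height. In the sketch below the 1.6 m default eye height and the sample inputs are hypothetical. The function encodes the level-ground assumption by treating the measured distance as horizontal.

```python
import math

def building_height(distance_m, elevation_deg, eye_height_m=1.6):
    """Estimate height from a horizontal distance and an angle of elevation.

    Assumes level ground, a truly horizontal distance, and a top directly
    above the base. eye_height_m is a hypothetical instrument height.
    """
    return distance_m * math.tan(math.radians(elevation_deg)) + eye_height_m

nominal = building_height(50.0, 40.0)
high = building_height(50.0, 41.0)  # the same scene with a +1° angle error
print(f"nominal {nominal:.1f} m; with +1° angle error {high:.1f} m")
```

With these inputs, a 1° error in the angle shifts the estimate by roughly 1.5 m. The accuracy of the angle reading therefore matters as much as the trigonometry.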

The Cosmic Distance Ladder

Astronomers face the ultimate indirect measurement challenge: measuring cosmic distances. They’ve built a “distance ladder” where each rung depends on the previous one, accumulating assumptions at each level.

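- Parallax measurements assume Earth’s orbital parameters are precisely known

- Standard candle methods assume certain stellar objects have a known intrinsic brightness, or a calibrated relation that predicts it (such as the period-luminosity relation for Cepheid variables)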

- Redshift calculations assume the cosmological principle and specific universal expansion models

- Each technique’s validity range overlaps with the next, creating interdependencies
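The bottom rung and the way uncertainty accumulates can be sketched in a few lines. Parallax gives distance in parsecs as d = 1/p, with p in arcseconds. The code below uses first-order error propagation, which is only reasonable when the parallax uncertainty is small. It then combines the rungs’ fractional errors in quadrature, which assumes those errors are independent. The parallax value and the later rungs’ 5% and 7% uncertainties are hypothetical.

```python
import math

def parallax_distance_pc(parallax_arcsec, sigma_arcsec):
    """Distance in parsecs from an annual parallax angle: d = 1 / p.

    First-order propagation gives sigma_d = sigma_p / p**2, valid only
    when sigma_p is small compared with p.
    """
    d = 1.0 / parallax_arcsec
    sigma_d = sigma_arcsec / parallax_arcsec**2
    return d, sigma_d

def ladder_relative_error(*rung_relative_errors):
    """Combine independent fractional errors from successive rungs in quadrature.

    Correlated (systematic) errors would not shrink this way.
    """
    return math.sqrt(sum(e**2 for e in rung_relative_errors))

# Hypothetical star: parallax 0.010 arcsec ± 0.0005 arcsec (a 5% measurement).
d, sigma_d = parallax_distance_pc(0.010, 0.0005)
rung1 = sigma_d / d
# Hypothetical further rungs that add 5% and 7% uncertainty of their own.
total = ladder_relative_error(rung1, 0.05, 0.07)
print(f"d = {d:.0f} ± {sigma_d:.0f} pc; combined relative error after three rungs ≈ {total:.1%}")
```

Even under the optimistic independence assumption, three modest rungs compound to roughly a 10% uncertainty. An invalid assumption on the bottom rung shifts every rung above it.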

This cascading dependency means that errors and invalid assumptions on lower rungs propagate upward and compound as we measure farther distances. Careful validation of each assumption is therefore essential for maintaining measurement integrity across cosmic scales.

⚖️ Weight and Mass: Untangling Gravity’s Influence

We routinely confuse weight and mass, yet this distinction reveals crucial assumptions in indirect measurement. Bathroom scales measure weight—the gravitational force on your body—but report mass, assuming standard gravitational acceleration.

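This assumption works well for most purposes but fails in precision contexts. A force-sensing scale would read differently at sea level than on a mountain summit. It would also read differently at the equator than at the poles, by roughly half a percent, because surface gravity is about 9.78 m/s² at the equator and about 9.83 m/s² at the poles. On the Moon the difference would be far larger. Converting weight to mass this way assumes a single, fixed value of gravitational acceleration.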
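The conversion can be sketched numerically. The model below assumes a scale that senses force and divides by the conventional standard gravity of 9.80665 m/s², with no adjustment for local gravity. The local values are approximate surface figures. A beam balance that compares against reference masses would not show this effect.

```python
STANDARD_GRAVITY = 9.80665  # m/s^2, conventional value the scale is assumed to use

def displayed_mass(true_mass_kg, local_g):
    """Mass a force-sensing scale would display if calibrated for standard gravity.

    The scale senses F = m * local_g but divides by STANDARD_GRAVITY.
    """
    force_newtons = true_mass_kg * local_g
    return force_newtons / STANDARD_GRAVITY

# Approximate surface gravity values in m/s^2.
for place, g in [("equator", 9.780), ("poles", 9.832), ("Moon", 1.62)]:
    print(f"{place:8s} a 70 kg person reads {displayed_mass(70.0, g):6.2f} kg")
```

The person’s mass is the same in all three places. Only the assumed gravity in the conversion makes the readings differ.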

Inertial Versus Gravitational Mass

Physics distinguishes between inertial mass (resistance to acceleration) and gravitational mass (response to gravity). Their equivalence is an assumption—albeit an extraordinarily well-tested one. This assumption underlies every measurement that converts between force and mass.

🔬 Particle Physics: When Direct Observation Becomes Impossible

Particle physics pushes indirect measurement to extreme limits. We cannot directly observe subatomic particles—instead, we measure their effects on detectors and reconstruct their properties from interaction patterns.

When physicists discovered the Higgs boson, they didn’t see the particle itself. They observed decay products, applied conservation laws, and used statistical analysis to infer the Higgs’s existence and properties. This process assumes the Standard Model’s validity, detector accuracy, and specific particle interaction mechanisms.

💼 Business Metrics: The Assumptions Behind KPIs

Business analysts constantly employ indirect measurement, often without recognizing the embedded assumptions. Customer satisfaction scores assume survey responses reflect true sentiment. Market share calculations assume accurate industry data. Revenue projections assume historical patterns continue.

The Net Promoter Score Example

The Net Promoter Score (NPS) asks customers one question: “How likely are you to recommend us?” This simple metric assumes recommendation likelihood predicts actual business outcomes, that customers accurately forecast their own behavior, and that the 0-10 scale captures meaningful distinctions.
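The standard NPS calculation groups the 0-10 answers into three bands. Promoters score 9-10, passives score 7-8, and detractors score 0-6. The score is the percentage of promoters minus the percentage of detractors. A minimal Python sketch follows; the survey responses are invented to show how much information that grouping discards.

```python
def net_promoter_score(ratings):
    """NPS from 0-10 ratings: % promoters (9-10) minus % detractors (0-6).

    Passives (7-8) count toward the total but toward neither group.
    """
    if not ratings:
        raise ValueError("need at least one rating")
    if any(not 0 <= r <= 10 for r in ratings):
        raise ValueError("ratings must be on the 0-10 scale")
    promoters = sum(1 for r in ratings if r >= 9)
    detractors = sum(1 for r in ratings if r <= 6)
    return 100.0 * (promoters - detractors) / len(ratings)

# Two hypothetical survey samples with very different customer sentiment.
polarized = [10, 10, 10, 10, 10, 0, 0, 0, 0, 0]
lukewarm = [7, 8, 7, 8, 7, 8, 7, 8, 7, 8]
print(net_promoter_score(polarized), net_promoter_score(lukewarm))
```

Both samples score exactly 0, although one customer base is split between enthusiasts and detractors and the other is uniformly lukewarm. This is a direct test of the assumption that the scale captures meaningful distinctions.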

These assumptions hold reasonably well in many contexts but break down in others. Cultural differences affect how people use rating scales. Survey timing influences responses. The metric cannot capture why scores change. Recognizing these limitations prevents over-reliance on any single measurement.

🌊 Environmental Measurement: Sampling and Inference

Environmental scientists face unique indirect measurement challenges. How do you measure ocean temperature when you can only sample specific locations? How do you estimate air quality across a city with limited monitoring stations?

These measurements assume spatial and temporal representativeness—that samples reflect broader conditions. They assume measurement stations aren’t affected by local anomalies. They assume interpolation methods accurately estimate unmeasured areas.
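Inverse-distance weighting (IDW) is one common way to fill the gaps between stations. It is shown here as an illustrative choice, not as the method any particular monitoring network uses. Its core assumption is visible in the code: nearer stations are more representative. The station positions and readings are invented.

```python
import math

def idw_estimate(stations, x, y, power=2.0):
    """Inverse-distance-weighted estimate at (x, y) from (sx, sy, value) stations.

    Assumes the field varies smoothly and nearby stations are more
    representative; it cannot reveal a hotspot that no station sampled.
    """
    if not stations:
        raise ValueError("need at least one station")
    weighted_sum = weight_total = 0.0
    for sx, sy, value in stations:
        dist = math.hypot(x - sx, y - sy)
        if dist == 0.0:
            return value  # exactly at a station: use its reading directly
        weight = 1.0 / dist**power
        weighted_sum += weight * value
        weight_total += weight
    return weighted_sum / weight_total

# Hypothetical PM2.5 readings (µg/m^3) at three stations on a km grid.
stations = [(0.0, 0.0, 12.0), (4.0, 0.0, 20.0), (0.0, 3.0, 15.0)]
print(round(idw_estimate(stations, 1.0, 1.0), 2))
```

The estimate can never exceed the highest station reading. A pollution source located between stations would therefore be invisible to this method, however carefully the stations themselves are calibrated.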

Climate Data and Historical Reconstruction

Climate scientists reconstruct historical temperatures using proxy data: tree rings, ice cores, coral growth patterns, and sediment layers. Each proxy assumes specific relationships between the measurable characteristic and historical temperature.

Tree ring width assumes temperature was the limiting growth factor, not water or nutrients. Ice core oxygen isotope ratios assume consistent precipitation patterns. Coral growth assumes stable ocean chemistry. Validating these assumptions across different proxies provides confidence in reconstructed temperatures.

🧮 Statistical Assumptions: The Hidden Framework

Statistical methods provide the mathematical foundation for many indirect measurements, but they introduce their own assumptions. Ordinary least-squares regression assumes a correctly specified relationship (often a linear one), independent errors, and constant error variance. Confidence intervals assume specific probability distributions. Hypothesis tests assume random sampling.

The Central Limit Theorem’s Role

Many indirect measurements rely on the Central Limit Theorem: the distribution of a sample mean approaches a normal distribution as the sample size grows. This powerful theorem underpins countless statistical techniques. In its standard form, however, it assumes independent observations from a distribution with finite variance, and a sample large enough for the approximation to hold.

When these assumptions fail, statistical inferences become unreliable. Positively correlated measurements violate independence, so the usual σ/√n standard error understates the real uncertainty. With small samples, the distribution of the mean may still be far from normal. Outliers can dominate averages. Robust indirect measurement requires verifying statistical assumptions, not just applying formulas.
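The independence assumption is easy to probe by simulation. The minimal Python sketch below uses arbitrarily chosen parameters (n = 50, 2,000 trials, correlation 0.8). It compares sample means of independent data with sample means of positively correlated data generated by a first-order autoregressive (AR(1)) process. Both kinds of observation have variance 1, so σ/√n predicts the same spread for both.

```python
import math
import random
import statistics

def ar1_sample_mean(n, rho, rng):
    """Mean of n observations from a stationary AR(1) process.

    Each observation has variance 1; consecutive observations have
    correlation rho. rho = 0 gives independent standard-normal draws.
    """
    x = rng.gauss(0.0, 1.0)
    total = x
    for _ in range(n - 1):
        x = rho * x + math.sqrt(1.0 - rho**2) * rng.gauss(0.0, 1.0)
        total += x
    return total / n

rng = random.Random(42)  # fixed seed so the run is reproducible
n, trials = 50, 2000
naive_se = 1.0 / math.sqrt(n)  # sigma / sqrt(n), valid only under independence

spreads = {}
for rho in (0.0, 0.8):
    means = [ar1_sample_mean(n, rho, rng) for _ in range(trials)]
    spreads[rho] = statistics.stdev(means)
    print(f"rho={rho}: spread of sample means {spreads[rho]:.3f} vs sigma/sqrt(n) {naive_se:.3f}")
```

With independent data the simulated spread matches σ/√n. With correlated data it is several times larger, so a confidence interval built on the independence assumption would be far too narrow.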

🎓 Educational Applications: Teaching Measurement Literacy

Educators face the challenge of teaching students not just how to measure, but how to think critically about measurement. This requires making hidden assumptions explicit and developing intuition about when measurements can be trusted.

Effective measurement education moves beyond formulas to explore uncertainty, bias, and contextual factors. Students learn to ask: What are we assuming? How could these assumptions fail? What evidence supports their validity? How sensitive are results to assumption violations?

🛠️ Practical Strategies for Unveiling Hidden Assumptions

Mastering indirect measurement requires systematic approaches to identifying and validating assumptions. These strategies apply across disciplines and measurement contexts.

The Assumption Audit Process

Begin by explicitly listing every assumption underlying your measurement approach. Consider physical assumptions (constant density, linear behavior), statistical assumptions (independence, normality), and contextual assumptions (stable conditions, representative samples).

For each assumption, assess its validity in your specific context. What evidence supports it? Under what conditions might it fail? How sensitive are your results to violations? This audit process transforms implicit assumptions into explicit, testable hypotheses.

Sensitivity Analysis and Uncertainty Quantification

Sensitivity analysis reveals how measurement results change when assumptions vary. If small assumption changes produce large result changes, your measurement is fragile and requires careful validation. If results remain stable across reasonable assumption ranges, your measurement is robust.
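A simple one-at-a-time sensitivity analysis can be sketched for sonar depth, computed as sound speed × round-trip echo time ÷ 2. Each input is varied across a plausible range while the others are held at nominal values. This simple scheme ignores interactions between inputs. The ±1 ms timing range and the 1450-1550 m/s sound-speed range are hypothetical illustrative values.

```python
def sonar_depth(echo_time_s, sound_speed_m_s):
    """Depth from a round-trip echo time, assuming one uniform sound speed."""
    return sound_speed_m_s * echo_time_s / 2.0

def one_at_a_time(model, nominal, ranges):
    """Vary each input across (low, high) while holding the others at nominal.

    Returns {input_name: (output_at_low, output_at_high)}.
    """
    results = {}
    for name, (low, high) in ranges.items():
        outputs = []
        for value in (low, high):
            inputs = dict(nominal, **{name: value})
            outputs.append(model(**inputs))
        results[name] = tuple(outputs)
    return results

nominal = {"echo_time_s": 2.0, "sound_speed_m_s": 1500.0}
ranges = {
    "echo_time_s": (1.999, 2.001),        # hypothetical ±1 ms timing uncertainty
    "sound_speed_m_s": (1450.0, 1550.0),  # hypothetical plausible sound-speed range
}
base = sonar_depth(**nominal)
res = one_at_a_time(sonar_depth, nominal, ranges)
for name, (lo, hi) in res.items():
    print(f"{name:16s} depth {lo:7.1f} to {hi:7.1f} m (nominal {base:.1f} m)")
```

Here the assumed sound speed moves the depth by ±50 m, while the timing error moves it by less than ±1 m. The fragile part of this measurement is the assumption, not the stopwatch.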

Uncertainty quantification goes further, estimating measurement error from all sources including assumption violations. This provides realistic confidence bounds that account for systematic biases, not just random errors.
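Monte Carlo propagation is one way to quantify uncertainty from several sources at once. The sketch below applies it to a trigonometric height estimate. It draws random errors for distance and angle, and it represents an unknown systematic mis-levelling of the instrument as a uniform distribution over ±0.5°. All error sizes are hypothetical assumptions chosen for illustration.

```python
import math
import random
import statistics

def height_m(distance_m, elevation_deg):
    """Height above eye level from horizontal distance and elevation angle."""
    return distance_m * math.tan(math.radians(elevation_deg))

rng = random.Random(0)  # fixed seed for reproducibility
samples = []
for _ in range(20_000):
    distance = rng.gauss(50.0, 0.2)  # random tape error, sd 0.2 m (hypothetical)
    angle = rng.gauss(40.0, 0.3)     # random reading error, sd 0.3 deg (hypothetical)
    # Systematic term: the instrument may be mis-levelled by up to +/-0.5 deg.
    # Its actual value is unknown, so it is represented by a uniform distribution.
    angle += rng.uniform(-0.5, 0.5)
    samples.append(height_m(distance, angle))

mean = statistics.fmean(samples)
sd = statistics.stdev(samples)
cuts = statistics.quantiles(samples, n=40)  # 39 cut points at 2.5% steps
print(f"height ~ {mean:.2f} m, sd {sd:.2f} m, 95% interval {cuts[0]:.2f} to {cuts[-1]:.2f} m")
```

Dropping the systematic term would shrink the reported interval noticeably. This is how an analysis that tracks only random errors ends up overconfident.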

🔄 Cross-Validation Through Multiple Methods

Using multiple independent measurement methods provides powerful validation. When different approaches with different assumptions yield consistent results, confidence increases. When they diverge, assumption violations likely exist somewhere.

Astronomers use this approach extensively, measuring distances through parallax, spectroscopic methods, and standard candles. Agreement between methods validates assumptions. Disagreement triggers investigation into which assumptions failed and why.
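A basic consistency check between two independent estimates compares their difference with its combined standard uncertainty. In the sketch below, the function name and the distance values are hypothetical. Inverse-variance weighting is one conventional way to merge estimates, but it only makes sense when they agree.

```python
import math

def consistency_check(est_a, sigma_a, est_b, sigma_b):
    """Compare two independent estimates of the same quantity.

    Returns (z, weighted_mean, weighted_sigma). z is the difference in
    units of its combined standard uncertainty; |z| well above about 2-3
    suggests that some method's assumptions (or stated uncertainties) are off.
    """
    z = (est_a - est_b) / math.sqrt(sigma_a**2 + sigma_b**2)
    w_a, w_b = 1.0 / sigma_a**2, 1.0 / sigma_b**2
    mean = (w_a * est_a + w_b * est_b) / (w_a + w_b)
    sigma = math.sqrt(1.0 / (w_a + w_b))
    return z, mean, sigma

# Hypothetical distances (parsecs) to one star cluster from two methods.
print(consistency_check(132.0, 3.0, 136.0, 4.0))  # agree within uncertainties
print(consistency_check(132.0, 3.0, 150.0, 4.0))  # tension: investigate assumptions
```

The first pair differs by 0.8 combined standard uncertainties and can reasonably be merged. The second differs by 3.6, which calls for investigation rather than averaging.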

🚀 Emerging Technologies and New Measurement Paradigms

Modern technology enables measurement approaches that were previously impossible, but they introduce new assumptions. Machine learning models make predictions based on training data patterns, assuming those patterns generalize to new situations. Sensor networks provide dense spatial coverage, assuming sensors remain calibrated and representative.

The Machine Learning Measurement Challenge

Machine learning increasingly powers indirect measurements, from medical diagnosis to financial forecasting. These models learn complex relationships from data, but they embed assumptions about data quality, feature relevance, and model architecture.

Unlike traditional models with explicit equations, machine learning models contain implicit assumptions that are difficult to audit. This “black box” nature creates new challenges for validating measurement assumptions and understanding failure modes.

🎯 Building Measurement Wisdom: Beyond Technical Skills

True mastery of indirect measurement transcends technical knowledge to encompass judgment, critical thinking, and contextual awareness. It requires recognizing that all measurements are models of reality, not reality itself, and that every model makes simplifying assumptions.

The wisest practitioners maintain healthy skepticism about their own measurements while remaining confident enough to act on them. They document assumptions explicitly, communicate uncertainties honestly, and continuously update their understanding as new evidence emerges.

🌟 The Path Forward: Cultivating Assumption Awareness

Developing expertise in indirect measurement is an ongoing journey. As measurement contexts become more complex and stakes increase, the ability to identify and validate hidden assumptions becomes increasingly valuable.

Start by examining familiar measurements with fresh eyes. What assumptions underlie your bathroom scale, your car’s speedometer, your fitness tracker? How could those assumptions fail? What would you observe if they did?
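The speedometer is a good first exercise. Many speedometers infer speed from how fast the wheels (or the gearbox output) turn, multiplied by an assumed tire circumference. The simplified Python sketch below assumes no wheel slip, and the tire diameters are hypothetical. It shows what happens when the real tire no longer matches the assumed one.

```python
import math

def speed_kmh(wheel_rpm, tire_diameter_m):
    """Vehicle speed from wheel rotation rate and tire diameter (no wheel slip)."""
    metres_per_minute = wheel_rpm * math.pi * tire_diameter_m
    return metres_per_minute * 60.0 / 1000.0

wheel_rpm = 800.0
shown = speed_kmh(wheel_rpm, 0.65)    # diameter the speedometer assumes (hypothetical)
actual = speed_kmh(wheel_rpm, 0.635)  # worn or replacement tire (hypothetical)
print(f"speedometer shows {shown:.1f} km/h; car actually travels {actual:.1f} km/h")
```

A smaller real tire makes the display read high by the ratio of the two diameters. You would observe the failure as a mismatch with a GPS speed reading or with distance markers along the road.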

This practice builds intuition that transfers to professional contexts where measurement accuracy matters for decisions, safety, or scientific progress. The habit of questioning assumptions, validating models, and quantifying uncertainty separates adequate measurement from excellent measurement.

By unveiling the hidden assumptions behind accurate estimations, we transform indirect measurement from a mechanical process into an intellectual discipline. We recognize that measurement quality depends not just on instruments and calculations, but on the careful reasoning that connects observable proxies to the quantities we actually care about.

The next time you encounter an indirect measurement—whether in scientific literature, business reports, or everyday life—pause to consider the invisible assumptions supporting it. This awareness is the foundation of measurement mastery and the key to making accurate estimations in an uncertain world. 📏✨

Toni Santos is a health systems analyst and methodological researcher specializing in the study of diagnostic precision, evidence synthesis protocols, and the structural delays embedded in public health infrastructure. Through an interdisciplinary and data-focused lens, Toni investigates how scientific evidence is measured, interpreted, and translated into policy across institutions, funding cycles, and consensus-building processes. His work is grounded in a fascination with measurement not only as a technical capacity but as a carrier of hidden assumptions. From unvalidated diagnostic thresholds to consensus gaps and resource allocation bias, Toni uncovers the structural and systemic barriers through which evidence struggles to influence health outcomes at scale. With a background in epidemiological methods and health policy analysis, Toni blends quantitative critique with institutional research to reveal how uncertainty is managed, consensus is delayed, and funding priorities encode scientific direction.

As the creative mind behind Trivexono, Toni curates methodological analyses, evidence synthesis critiques, and policy interpretations that illuminate the systemic tensions between research production, medical agreement, and public health implementation. His work is a tribute to:

- The invisible constraints of Measurement Limitations in Diagnostics

- The slow mechanisms of Medical Consensus Formation and Delay

- The structural inertia of Public Health Adoption Delays

- The directional influence of Research Funding Patterns and Priorities

Whether you're a health researcher, policy analyst, or curious observer of how science becomes practice, Toni invites you to explore the hidden mechanisms of evidence translation: one study, one guideline, one decision at a time.