Detection thresholds define the boundaries between signal and noise, shaping how systems perceive, measure, and respond to stimuli in an increasingly complex technological landscape.

🔍 The Foundation of Detection Thresholds in Modern Systems

Every measurement system, whether biological or technological, operates within specific limits that determine its ability to distinguish meaningful signals from background noise. These detection thresholds represent critical parameters that fundamentally influence accuracy, reliability, and efficiency across countless applications. From medical diagnostics to environmental monitoring, understanding and optimizing these constraints has become essential for developing smarter, more precise solutions.

Detection threshold constraints aren’t merely technical specifications—they represent the gateway between observable and unobservable phenomena. When engineers and scientists push against these boundaries, they unlock new capabilities that transform industries and improve lives. The quest to lower detection limits while maintaining specificity has driven innovation in fields ranging from analytical chemistry to artificial intelligence.

Modern sensor technology, computational power, and algorithmic sophistication have collectively enabled unprecedented sensitivity levels. Yet challenges persist: lower thresholds often introduce false positives, while overly conservative limits may miss critical signals. Striking the optimal balance requires deep understanding of both theoretical principles and practical constraints.

Understanding the Science Behind Detection Limits

At its core, a detection threshold is the minimum signal intensity that a system can reliably distinguish from background noise. This concept applies across measurement domains, though implementation varies dramatically with context. In analytical chemistry, detection limits are often expressed as concentrations, such as parts per billion of a substance. In digital communications, they determine how weak a signal can be while still conveying information reliably.

The signal-to-noise ratio (SNR) serves as the fundamental metric governing detection capabilities. Systems with higher SNR can identify weaker signals more reliably, but achieving superior SNR often requires trade-offs in cost, complexity, or response time. Engineers must carefully evaluate these compromises when designing detection systems for specific applications.

Statistical considerations play an equally important role. Detection thresholds must account for natural variability in both signals and noise. Setting thresholds too low increases false positive rates, potentially overwhelming systems with spurious detections. Conversely, excessively high thresholds result in false negatives, causing systems to miss genuine signals. Probability theory and hypothesis testing provide frameworks for optimizing these decisions.
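The hypothesis-testing view can be made concrete with a minimal sketch. It assumes Gaussian noise with known mean and standard deviation, which is an idealization, and the function names and numbers are illustrative rather than taken from any particular system. The threshold is chosen so that noise alone exceeds it with a target false alarm probability, and the resulting miss probability is computed for a signal of a given mean level.

```python
from statistics import NormalDist

def threshold_for_false_alarm(noise_mean, noise_std, p_false_alarm):
    """Threshold that Gaussian noise alone exceeds with probability p_false_alarm."""
    return NormalDist(noise_mean, noise_std).inv_cdf(1.0 - p_false_alarm)

def miss_probability(threshold, signal_mean, noise_std):
    """Probability that signal plus noise falls below the threshold (a false negative)."""
    return NormalDist(signal_mean, noise_std).cdf(threshold)

# Hypothetical setup: unit-variance noise around zero, signal mean of 3 noise sigmas.
noise_mean, noise_std = 0.0, 1.0
for p_fa in (0.1, 0.01, 0.001):
    t = threshold_for_false_alarm(noise_mean, noise_std, p_fa)
    p_miss = miss_probability(t, signal_mean=3.0, noise_std=noise_std)
    print(f"P_fa={p_fa:<6} threshold={t:.2f} P_miss={p_miss:.3f}")
```

Tightening the false alarm target pushes the threshold up and the miss probability with it, which is the trade-off described above.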

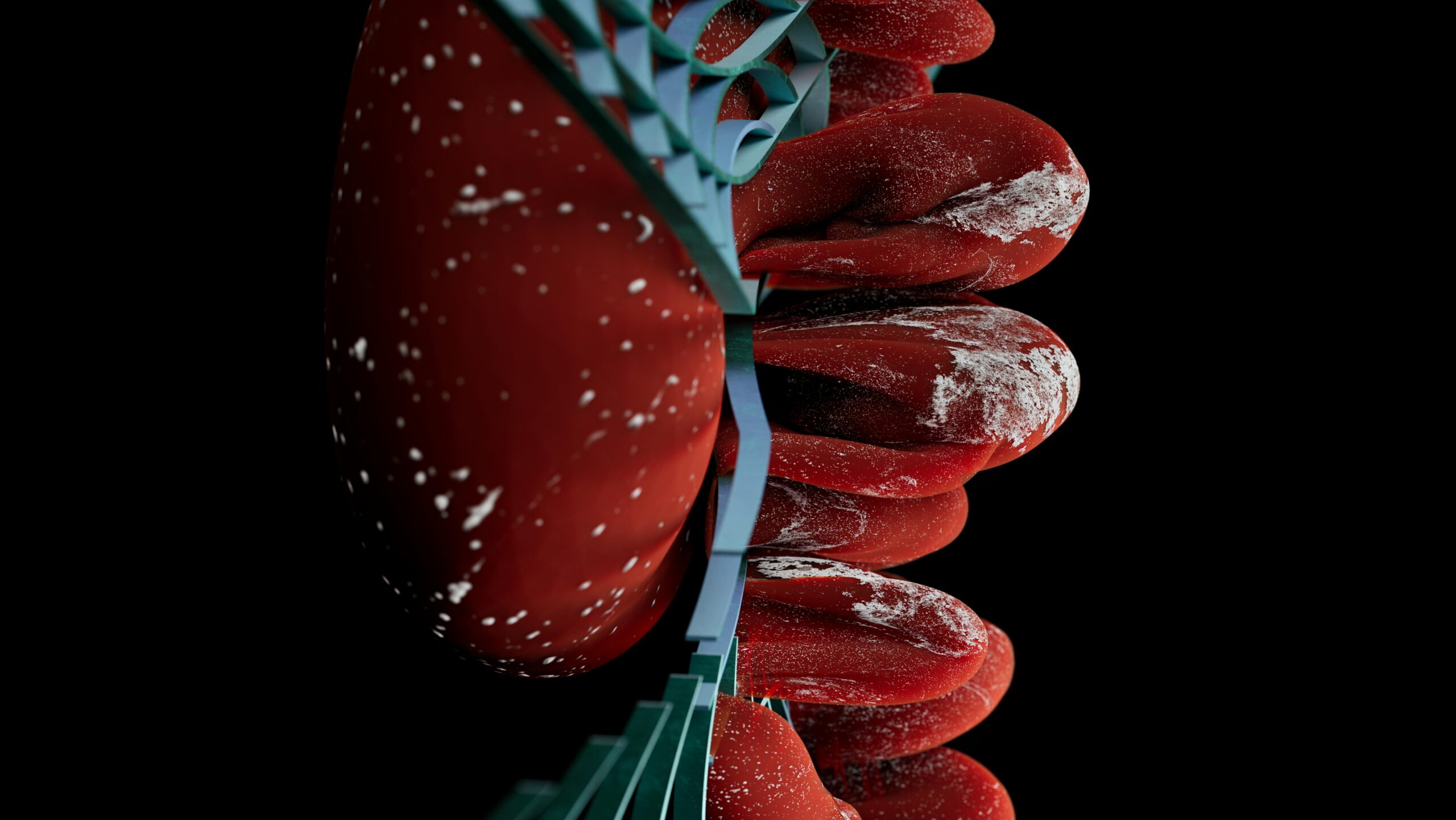

Biological Detection Systems as Natural Benchmarks

Nature has evolved remarkably sophisticated detection mechanisms that continue to inspire technological development. Human sensory systems demonstrate how biological organisms navigate threshold constraints with elegant efficiency. Rod cells in the human retina, for instance, can respond to individual photons under optimal dark-adapted conditions, while the olfactory system picks out specific odorant molecules from a vast background of other airborne compounds.

These biological systems incorporate adaptive thresholds that adjust based on context and recent exposure history. When transitioning from bright sunlight to dim interiors, our visual system gradually lowers its detection threshold through dark adaptation. This dynamic adjustment optimizes sensitivity without sacrificing reliability—a principle increasingly adopted in technological systems.

🎯 Key Factors Influencing Detection Threshold Performance

Multiple variables interact to determine effective detection thresholds in practical systems. Understanding these factors enables designers to optimize performance for specific applications and operational environments.

Signal Characteristics and Temporal Dynamics

The nature of the target signal profoundly impacts detection capabilities. Transient signals lasting milliseconds require different detection strategies than continuous or slowly varying phenomena. Frequency content, amplitude distribution, and temporal patterns all influence how effectively systems can extract signals from noise.

Integration time represents a crucial parameter in many detection systems. Averaging measurements over longer periods improves SNR by reducing random noise fluctuations, effectively lowering detection thresholds. For uncorrelated noise, averaging N independent samples reduces the noise standard deviation by a factor of √N, so each doubling of SNR costs roughly four times the integration time. However, extended integration times sacrifice temporal resolution and may miss rapid changes. Applications requiring real-time response must carefully balance these competing demands.
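The effect of averaging can be checked with a small simulation. This is a sketch under the assumption of independent, zero-mean Gaussian noise; correlated noise or drift would not average down this way. The function name and trial counts are illustrative.

```python
import random
import statistics

def averaged_noise_std(n_samples, n_trials=2000, noise_std=1.0, seed=0):
    """Empirical standard deviation of the mean of n_samples independent noise draws."""
    rng = random.Random(seed)
    means = [
        statistics.fmean(rng.gauss(0.0, noise_std) for _ in range(n_samples))
        for _ in range(n_trials)
    ]
    return statistics.pstdev(means)

# Residual noise should shrink roughly as 1 / sqrt(N) as more samples are averaged.
for n in (1, 4, 16, 64):
    print(f"N={n:<3} residual noise std ~ {averaged_noise_std(n):.3f}")
```

Each fourfold increase in the number of averaged samples should roughly halve the residual noise, at the cost of four times the measurement time.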

Environmental and Operational Constraints

Detection systems rarely operate under ideal laboratory conditions. Environmental factors such as temperature fluctuations, electromagnetic interference, mechanical vibration, and chemical contamination all degrade detection performance. Robust system design must anticipate and mitigate these real-world challenges.

Calibration drift presents another persistent challenge. Even well-designed sensors experience gradual changes in sensitivity over time due to component aging, contamination, or environmental exposure. Regular recalibration maintains accuracy, but automated self-calibration mechanisms increasingly enable continuous threshold optimization without manual intervention.

Revolutionary Applications Pushing Detection Boundaries

Advances in detection threshold optimization have catalyzed breakthroughs across diverse fields, fundamentally transforming what’s possible in science, medicine, industry, and everyday life.

Medical Diagnostics and Early Disease Detection 🏥

Perhaps nowhere are improved detection limits more consequential than in medical diagnostics. Ultra-sensitive assays now identify disease biomarkers at concentrations previously considered undetectable, enabling earlier intervention when treatments prove most effective. Liquid biopsy techniques detect circulating tumor DNA from minuscule cancer cell populations, potentially identifying malignancies before symptoms appear.

Point-of-care diagnostic devices bring laboratory-grade sensitivity to resource-limited settings. By lowering detection thresholds while simplifying operation, these technologies democratize access to medical testing. However, clinical implementation requires careful validation to ensure specificity matches sensitivity—false positives in medical screening carry significant psychological and economic costs.
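A short calculation shows why specificity matters so much in screening. The sketch below applies Bayes' rule to compute the positive predictive value, the fraction of positive results that are true positives. The sensitivity, specificity, and prevalence figures are hypothetical and chosen only for illustration.

```python
def positive_predictive_value(sensitivity, specificity, prevalence):
    """Fraction of positive test results that correspond to actual disease."""
    true_pos = sensitivity * prevalence
    false_pos = (1.0 - specificity) * (1.0 - prevalence)
    return true_pos / (true_pos + false_pos)

# Hypothetical screening scenario: 1% of the tested population has the condition.
for specificity in (0.95, 0.999):
    ppv = positive_predictive_value(sensitivity=0.99, specificity=specificity, prevalence=0.01)
    print(f"specificity={specificity}: PPV={ppv:.3f}")
```

Under these assumed numbers, a test with 99% sensitivity and 95% specificity yields a positive predictive value of only about 17%, while raising specificity to 99.9% lifts it to about 91%.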

Wearable health monitors exemplify detection threshold optimization in consumer applications. These devices continuously measure physiological parameters, identifying subtle deviations that might indicate developing health issues. Advanced algorithms distinguish meaningful changes from normal variation, personalizing thresholds based on individual baselines rather than population averages.
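One simple way to personalize a threshold is to flag readings that fall outside a band around each person's own baseline. The sketch below uses a mean plus or minus k standard deviations rule; the rule, the value of k, and the resting heart rate numbers are assumptions for illustration, not a description of how any specific device works.

```python
import statistics

def personal_band(baseline_readings, k=3.0):
    """Lower and upper limits at k sample standard deviations around the baseline mean."""
    mean = statistics.fmean(baseline_readings)
    std = statistics.stdev(baseline_readings)
    return mean - k * std, mean + k * std

def is_anomalous(reading, baseline_readings, k=3.0):
    """True if the reading falls outside this individual's own band."""
    low, high = personal_band(baseline_readings, k)
    return reading < low or reading > high

# Hypothetical resting heart rates (beats per minute) for two people.
athlete = [48, 50, 49, 51, 50, 52, 49]
typical = [70, 72, 68, 71, 73, 69, 70]

reading = 66
print("athlete flagged:", is_anomalous(reading, athlete))
print("typical flagged:", is_anomalous(reading, typical))
```

The same reading of 66 is far outside the first person's usual range but unremarkable for the second, which a single population-wide cutoff could not express.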

Environmental Monitoring and Pollution Detection 🌍

Environmental protection increasingly depends on detecting trace contaminants at parts-per-trillion concentrations. Whether monitoring drinking water for toxic compounds, measuring atmospheric pollutants, or detecting agricultural pesticide residues, ultra-low detection limits enable proactive intervention before contamination reaches dangerous levels.

Remote sensing technologies deploy detection systems across vast geographical areas, creating comprehensive monitoring networks. Satellite-based sensors detect methane leaks from infrastructure, monitor ocean health through chlorophyll measurements, and track deforestation with unprecedented precision. These applications demonstrate how improved detection thresholds scale from individual measurements to planetary monitoring.

Industrial Process Control and Quality Assurance

Manufacturing depends on rigorous quality control, with detection systems identifying defects or variations that might compromise product performance. Semiconductor fabrication, pharmaceutical production, and food processing all rely on ultra-sensitive detection to maintain consistency and safety.

Predictive maintenance represents another industrial application where detection threshold optimization delivers substantial value. By identifying subtle changes in vibration patterns, temperature profiles, or acoustic emissions, systems predict equipment failures before they occur. This capability transforms maintenance from reactive to proactive, reducing downtime and extending equipment lifespan.
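Subtle, sustained shifts of the kind described above are often easier to catch by accumulating small deviations than by waiting for a single reading to cross a limit. The sketch below uses a one-sided CUSUM (cumulative sum) detector as one possible technique; the vibration readings, target level, slack, and alarm limit are hypothetical values.

```python
def cusum_alarm_index(values, target, slack, limit):
    """Index at which the one-sided cumulative sum first exceeds limit, or None."""
    s = 0.0
    for i, x in enumerate(values):
        # Accumulate only the excess above target + slack; never go below zero.
        s = max(0.0, s + (x - target - slack))
        if s > limit:
            return i
    return None

# Hypothetical vibration RMS readings that creep upward over time.
readings = [1.0, 1.1, 0.9, 1.0, 1.2, 1.3, 1.3, 1.4, 1.4, 1.5]
alarm_at = cusum_alarm_index(readings, target=1.0, slack=0.1, limit=1.0)
print("CUSUM alarm at index:", alarm_at)
```

In this example no single reading exceeds 1.5, so a fixed limit of 1.5 would stay silent, while the accumulated drift still triggers an alarm. The slack and limit control how much sustained deviation is tolerated before alarming.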

🔬 Technological Innovations Redefining Detection Capabilities

Continuous innovation across multiple technology domains drives ongoing improvements in detection threshold performance, enabling capabilities that seemed impossible just years ago.

Nanomaterials and Advanced Sensor Technologies

Nanoscale materials exhibit unique properties that can dramatically enhance detection sensitivity. Graphene-based sensors have been shown to register the adsorption of individual gas molecules through changes in electrical conductivity. Quantum dots enable fluorescence-based detection with exceptional brightness and photostability. Gold nanoparticles amplify signals in immunoassays, in some cases lowering detection limits by orders of magnitude.

These materials do more than improve existing detection methods; they enable entirely new approaches. Surface-enhanced Raman spectroscopy (SERS) using nanostructured substrates identifies molecules through their vibrational signatures and, under favorable conditions, has reached single-molecule sensitivity. Such techniques open possibilities for applications previously limited by insufficient detection capability.

Artificial Intelligence and Machine Learning Integration

Machine learning algorithms have revolutionized how systems distinguish signals from noise. Traditional threshold-based detection applies fixed criteria, while AI-powered approaches learn optimal decision boundaries from training data. These systems adapt to complex, non-linear relationships between variables that humans might never explicitly identify.

Deep learning excels at extracting weak signals from noisy data by identifying subtle patterns across multiple dimensions. Convolutional neural networks analyze images to detect abnormalities invisible to human observers. Recurrent networks process time-series data, identifying emerging trends before they become statistically obvious through conventional analysis.

However, AI-based detection systems introduce unique challenges. Their “black box” nature can make decision processes opaque, complicating validation and regulatory approval. Ensuring robustness against adversarial inputs and avoiding bias in training data require careful attention during development and deployment.

Quantum Sensing and Fundamental Physical Limits

Quantum technologies promise to push detection capabilities toward fundamental physical limits imposed by quantum mechanics itself. Quantum sensors exploit phenomena like entanglement and superposition to achieve sensitivities exceeding classical approaches.

Atomic magnetometers detect magnetic field variations billions of times weaker than Earth’s magnetic field, enabling applications from brain imaging to mineral exploration. Quantum gravimeters measure minute variations in gravitational acceleration, useful for infrastructure inspection and geological surveying. As these technologies mature, they’ll redefine what’s considered detectable.

Navigating the Challenge of False Positives and Negatives ⚖️

Optimizing detection thresholds invariably involves balancing competing error types. Understanding this trade-off proves essential for implementing effective detection systems across all domains.

False positives occur when systems incorrectly identify noise as signal. In security screening, false alarms waste resources and create complacency. In medical testing, they cause unnecessary anxiety and potentially harmful follow-up procedures. Reducing false positives typically requires raising detection thresholds or improving specificity through additional confirmation steps.

False negatives are missed detections: genuine signals incorrectly classified as noise. The consequences vary dramatically by application. A missed tumor detection might prove fatal, while an undetected network intrusion could compromise sensitive data. Minimizing false negatives drives thresholds lower, but for a given detector this increases the false positive rate; improving both at once requires better separation between signal and noise, for example through higher SNR or more informative measurements.

Receiver Operating Characteristic (ROC) curves provide valuable tools for visualizing and optimizing this trade-off. By plotting true positive rate against false positive rate across various threshold settings, engineers identify optimal operating points balancing competing priorities. The area under the ROC curve quantifies overall detection system performance independent of any specific threshold choice.
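The construction of a ROC curve can be sketched in a few lines. The code below sweeps the threshold over every observed score, records the false and true positive rates at each setting, and integrates with the trapezoidal rule. The scores and labels are made-up illustration data, and in practice a library routine would usually be preferred.

```python
def roc_points(scores, labels):
    """(false positive rate, true positive rate) pairs from sweeping the threshold downward."""
    positives = sum(labels)
    negatives = len(labels) - positives
    points = [(0.0, 0.0)]
    for threshold in sorted(set(scores), reverse=True):
        tp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 1)
        fp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 0)
        points.append((fp / negatives, tp / positives))
    return points

def auc(points):
    """Area under the ROC curve by the trapezoidal rule."""
    area = 0.0
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        area += (x1 - x0) * (y0 + y1) / 2.0
    return area

# Hypothetical detector scores; label 1 marks a genuine signal, 0 marks noise.
scores = [0.9, 0.8, 0.7, 0.6, 0.55, 0.4, 0.3, 0.2]
labels = [1, 1, 0, 1, 1, 0, 0, 0]

curve = roc_points(scores, labels)
print("ROC points:", curve)
print("AUC:", auc(curve))
```

An AUC of 0.5 corresponds to chance-level discrimination and 1.0 to perfect separation; this example data gives 0.875, reflecting the one noise sample that outscores two of the genuine signals.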

🚀 Future Directions and Emerging Paradigms

The field of detection threshold optimization continues evolving rapidly, with several promising directions poised to deliver transformative capabilities in coming years.

Adaptive and Context-Aware Detection Systems

Future systems will increasingly adjust their detection thresholds dynamically based on operational context and accumulated experience. Rather than applying fixed thresholds, these adaptive systems will learn optimal settings for different conditions, automatically reconfiguring themselves as circumstances change.

Contextual information from multiple sources will inform threshold decisions. A health monitor might lower detection thresholds when other indicators suggest developing illness. Security systems could adjust sensitivity based on threat level assessments or time-sensitive patterns. This contextual awareness enables optimization impossible with static configurations.
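A basic form of adaptive thresholding tracks the background level with exponentially weighted estimates of its mean and variance, then places the threshold a fixed number of standard deviations above the current mean. The sketch below is one possible design, not a standard algorithm from any particular product; the class name, the warm-up data, and the parameters alpha and k are assumptions.

```python
import statistics

class AdaptiveThreshold:
    """Threshold that follows slow changes in the background level."""

    def __init__(self, warmup, alpha=0.05, k=4.0):
        self.alpha = alpha  # how quickly the background estimate adapts
        self.k = k          # threshold height in background standard deviations
        self.mean = statistics.fmean(warmup)
        self.var = statistics.pvariance(warmup)

    def update(self, x):
        """Return True if x is a detection; otherwise fold x into the background estimate."""
        threshold = self.mean + self.k * self.var ** 0.5
        if x > threshold:
            return True  # detections are not allowed to contaminate the background
        delta = x - self.mean
        self.mean += self.alpha * delta
        self.var = (1.0 - self.alpha) * (self.var + self.alpha * delta * delta)
        return False

# Hypothetical warm-up readings taken while no signal is present.
detector = AdaptiveThreshold(warmup=[10.0, 10.5, 9.5, 10.2, 9.8])
for reading in (10.3, 10.1, 15.0, 10.4):
    print(reading, "detected" if detector.update(reading) else "background")
```

Excluding detections from the background update keeps a strong signal from raising the threshold, but it also means a sudden, permanent shift in background would be reported as a run of detections until the estimate is reset.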

Distributed and Collaborative Detection Networks

Individual sensors operate within sensitivity constraints, but networked systems aggregate information across multiple detection points, achieving collective capabilities exceeding any single node. Distributed detection networks compensate for individual sensor limitations through spatial diversity and information fusion.
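One simple fusion rule declares a detection only when at least k of n sensors agree. The sketch below computes the network-level detection and false alarm probabilities under the strong assumption that sensors err independently; the per-sensor probabilities are hypothetical.

```python
from math import comb

def k_of_n_probability(p, n, k):
    """Probability that at least k of n independent sensors fire, each with probability p."""
    return sum(comb(n, j) * p**j * (1 - p) ** (n - j) for j in range(k, n + 1))

# Hypothetical per-sensor performance: modest detection rate, noticeable false alarm rate.
p_detect, p_false_alarm = 0.7, 0.1
n, k = 5, 3

print("network detection probability:", round(k_of_n_probability(p_detect, n, k), 4))
print("network false alarm probability:", round(k_of_n_probability(p_false_alarm, n, k), 5))
```

With these assumed figures, requiring 3 of 5 sensors to agree raises the detection probability from 0.7 to about 0.837 while cutting the false alarm probability from 0.1 to about 0.0086. Correlated errors, such as shared interference, would reduce this benefit.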

These collaborative approaches enable detection of phenomena invisible to isolated sensors. Seismic monitoring networks triangulate earthquake locations and magnitudes from distributed measurements. Environmental sensor arrays map pollution plumes across regions. As communication technologies improve and deployment costs decrease, such networks will become increasingly prevalent.

Ethical Considerations and Responsible Implementation

As detection capabilities advance, ethical implications demand careful consideration. Surveillance technologies raise privacy concerns when detection thresholds enable identification or tracking of individuals in public spaces. Medical screening programs must balance early detection benefits against potential harms from overdiagnosis and unnecessary treatment.

Transparency about detection system capabilities and limitations becomes increasingly important. Users deserve to understand when systems might produce errors and how decisions are made. Regulatory frameworks must evolve alongside technological capabilities to ensure responsible deployment that respects individual rights while capturing societal benefits.

💡 Practical Strategies for Optimizing Detection Thresholds

Organizations implementing or improving detection systems can apply several evidence-based strategies to maximize performance while managing constraints effectively.

Begin with comprehensive characterization of both signals of interest and expected noise sources. Detailed understanding of statistical properties informs realistic performance expectations and guides design choices. Collect representative data across anticipated operating conditions rather than relying solely on idealized laboratory measurements.

Implement robust validation procedures using independent test datasets. Systems optimized on training data often show degraded performance when deployed in real environments. Cross-validation techniques help identify overfitting and ensure generalizability across diverse conditions.
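The gap between tuned and deployed performance can be estimated with k-fold cross-validation: the threshold is chosen on part of the data and scored on the held-out remainder. The sketch below uses synthetic Gaussian scores and a simple error-count criterion, both of which are assumptions made for illustration.

```python
import random

def error_count(samples, threshold):
    """Number of (score, label) pairs misclassified by 'detect if score >= threshold'."""
    return sum((score >= threshold) != bool(label) for score, label in samples)

def best_threshold(samples):
    """Candidate threshold, taken from the observed scores, with the fewest errors."""
    candidates = sorted({score for score, _ in samples})
    return min(candidates, key=lambda t: error_count(samples, t))

def cross_validated_error(samples, k=5):
    """Held-out error rate when the threshold is tuned on the other k - 1 folds."""
    folds = [samples[i::k] for i in range(k)]
    errors = 0
    for i, held_out in enumerate(folds):
        training = [s for j, fold in enumerate(folds) if j != i for s in fold]
        errors += error_count(held_out, best_threshold(training))
    return errors / len(samples)

# Synthetic data (an assumption): noise scores ~ N(0, 1), signal scores ~ N(2, 1).
rng = random.Random(0)
samples = [(rng.gauss(0.0, 1.0), 0) for _ in range(100)]
samples += [(rng.gauss(2.0, 1.0), 1) for _ in range(100)]
rng.shuffle(samples)

in_sample = error_count(samples, best_threshold(samples)) / len(samples)
held_out = cross_validated_error(samples)
print(f"in-sample error: {in_sample:.3f}, cross-validated error: {held_out:.3f}")
```

The in-sample figure is computed on the same data used to pick the threshold, so it tends to be optimistic; the cross-validated figure is usually the more realistic estimate of deployed performance.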

Consider the full decision pipeline rather than optimizing detection thresholds in isolation. Preprocessing steps like filtering and normalization significantly impact effective detection limits. Post-detection confirmation procedures can reduce false positive rates without sacrificing sensitivity. Holistic system optimization often outperforms component-level tuning.
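A common post-detection confirmation step is to require several consecutive threshold crossings before reporting an event. The sketch below shows that idea; the function name, sample values, and the choice of three consecutive samples are illustrative assumptions.

```python
def confirmed_detections(values, threshold, required=3):
    """Indices at which 'required' consecutive samples have exceeded the threshold."""
    run = 0
    hits = []
    for i, x in enumerate(values):
        run = run + 1 if x > threshold else 0
        if run == required:
            hits.append(i)  # report once per sustained event
    return hits

# Hypothetical samples: an isolated spike at index 1, a sustained event from index 4.
values = [0.2, 1.4, 0.3, 0.1, 1.2, 1.5, 1.3, 1.6, 0.2]
print(confirmed_detections(values, threshold=1.0, required=3))
```

The isolated spike is rejected while the sustained event is reported once, at the cost of a delay of `required - 1` samples before confirmation. Very brief genuine signals would be missed by this rule, so it suits persistent phenomena better than transients.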

Establish clear performance metrics aligned with application requirements. Different contexts prioritize different aspects of detection performance. Define success criteria explicitly—whether minimizing worst-case error rates, optimizing average performance, or achieving specific sensitivity levels—and design accordingly.
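When the two error types carry different costs, the success criterion can be encoded directly in the threshold choice. The sketch below picks the threshold that minimizes total cost on labeled data; the cost values and scores are hypothetical, and real applications would estimate costs from domain knowledge.

```python
def total_cost(threshold, samples, cost_fp, cost_fn):
    """Total cost of false positives and false negatives at this threshold."""
    cost = 0.0
    for score, label in samples:
        detected = score >= threshold
        if detected and not label:
            cost += cost_fp
        elif not detected and label:
            cost += cost_fn
    return cost

def min_cost_threshold(samples, cost_fp, cost_fn):
    """Candidate threshold with the lowest total cost; infinity means 'never detect'."""
    candidates = sorted({score for score, _ in samples}) + [float("inf")]
    return min(candidates, key=lambda t: total_cost(t, samples, cost_fp, cost_fn))

# Hypothetical (score, label) pairs; label 1 marks a genuine signal.
samples = [(0.1, 0), (0.3, 0), (0.35, 1), (0.5, 0), (0.6, 1), (0.8, 1), (0.9, 1)]

print("misses are costly:      ", min_cost_threshold(samples, cost_fp=1, cost_fn=10))
print("false alarms are costly:", min_cost_threshold(samples, cost_fp=10, cost_fn=1))
```

On this data the preferred threshold moves from 0.35 when misses are ten times as costly as false alarms to 0.6 when the costs are reversed, showing how the same detector yields different operating points under different priorities.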

Transforming Potential into Practical Solutions 🎖️

The journey from theoretical detection limits to deployed systems delivering real value requires bridging multiple gaps between possibility and practicality. Cost constraints, regulatory requirements, user acceptance, and operational realities all influence successful implementation.

Iterative development approaches prove particularly effective for detection systems. Initial deployments with conservative thresholds establish baseline performance and build user confidence. Subsequent refinements gradually improve capabilities based on accumulated operational experience. This evolutionary approach reduces risk while enabling continuous improvement.

User training and interface design significantly impact effectiveness regardless of underlying technical sophistication. Systems must present detection results clearly, providing appropriate context for interpretation. Overwhelming users with raw detections creates cognitive overload, while oversimplified presentations may obscure important nuances. Striking the right balance requires human factors expertise alongside technical development.

Detection threshold optimization represents an ongoing journey rather than a destination. As applications evolve, environments change, and technologies advance, continuous reassessment ensures systems remain optimally configured. Organizations that embrace this mindset of perpetual refinement position themselves to extract maximum value from detection capabilities.

The exploration of detection threshold constraints continues opening new frontiers across virtually every technological domain. By pushing boundaries while respecting fundamental limits, scientists and engineers create smarter solutions that enhance precision, enable earlier intervention, and unlock previously impossible applications. As detection capabilities advance, they amplify human ability to perceive, understand, and respond to our complex world with unprecedented sensitivity and insight.

Toni Santos is a health systems analyst and methodological researcher specializing in the study of diagnostic precision, evidence synthesis protocols, and the structural delays embedded in public health infrastructure. Through an interdisciplinary and data-focused lens, Toni investigates how scientific evidence is measured, interpreted, and translated into policy across institutions, funding cycles, and consensus-building processes.

His work is grounded in a fascination with measurement not only as a technical capacity, but as a carrier of hidden assumptions. From unvalidated diagnostic thresholds to consensus gaps and resource allocation bias, Toni uncovers the structural and systemic barriers through which evidence struggles to influence health outcomes at scale. With a background in epidemiological methods and health policy analysis, Toni blends quantitative critique with institutional research to reveal how uncertainty is managed, consensus is delayed, and funding priorities encode scientific direction.

As the creative mind behind Trivexono, Toni curates methodological analyses, evidence synthesis critiques, and policy interpretations that illuminate the systemic tensions between research production, medical agreement, and public health implementation. His work is a tribute to:

- The invisible constraints of Measurement Limitations in Diagnostics
- The slow mechanisms of Medical Consensus Formation and Delay
- The structural inertia of Public Health Adoption Delays
- The directional influence of Research Funding Patterns and Priorities

Whether you're a health researcher, policy analyst, or curious observer of how science becomes practice, Toni invites you to explore the hidden mechanisms of evidence translation: one study, one guideline, one decision at a time.