Instrument sensitivity defines the boundary between measurable signals and noise, fundamentally shaping our ability to capture precise data across scientific, industrial, and medical applications.

🔬 Understanding the Core Challenge of Sensitivity Limitations

Every measurement instrument operates within defined sensitivity thresholds that determine the smallest detectable change in a physical quantity. These constraints are more than technical specifications: they set the limits of what we can observe, measure, and understand about our world. When instruments lack adequate sensitivity, critical information disappears into background noise, and sound decisions can no longer be based on the data.

The impact of sensitivity constraints extends across diverse fields. In clinical diagnostics, insufficient sensitivity may fail to detect early-stage diseases when treatment would be most effective. In environmental monitoring, subtle pollutant concentrations might escape detection until reaching dangerous levels. Manufacturing quality control depends on instruments capable of identifying microscopic defects before they compromise product integrity.

Modern technology demands unprecedented precision. As processes become more refined and tolerances tighter, the gap between required and available sensitivity widens. This challenge drives continuous innovation in measurement science, pushing researchers and engineers to develop novel approaches for extracting meaningful signals from increasingly complex data environments.

📊 The Technical Foundations of Instrument Sensitivity

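Sensitivity refers to an instrument’s ability to respond to small changes in the measured parameter. Formally, it is the change in output per unit change in input, that is, the slope of the response curve. This characteristic differs from accuracy, which measures how close a reading comes to the true value, and precision, which indicates reproducibility of measurements. While these concepts interrelate, sensitivity together with the noise floor determines the detection threshold: the smallest change that can be distinguished from noise.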

Multiple factors influence instrument sensitivity. Detector quality represents the first consideration—photodetectors, sensors, and transducers must convert physical phenomena into electrical signals with minimal loss. Signal amplification stages introduce their own noise characteristics while boosting weak signals to measurable levels. Electronic components generate thermal noise that establishes baseline detection limits.

Environmental conditions profoundly affect sensitivity. Temperature fluctuations alter component behavior, introducing drift and instability. Electromagnetic interference corrupts signals, particularly in high-sensitivity applications. Mechanical vibrations disturb delicate measurements. These external factors often prove more limiting than inherent instrument capabilities.

⚡ Signal-to-Noise Ratio: The Critical Metric

The signal-to-noise ratio (SNR) quantifies instrument effectiveness at distinguishing true signals from background interference. High SNR enables detection of weak phenomena, while poor SNR obscures valuable information. Improving SNR represents the primary strategy for enhancing sensitivity without redesigning entire measurement systems.

Various noise sources contribute to overall system noise. Johnson-Nyquist noise arises from thermal agitation of charge carriers in resistive components. Shot noise results from the discrete nature of electrical charge. Flicker noise, or 1/f noise, dominates at low frequencies, and its physical origins are still not fully understood. External interference adds further complexity through electromagnetic coupling and ground loops.
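The thermal and shot noise terms have simple closed forms, which makes it possible to estimate a noise floor before building anything. The sketch below is illustrative only: the function names and the example values (a 1 kΩ resistor at 300 K, 1 µA of current, a 10 kHz bandwidth, a 10 µV signal) are assumptions, not figures from any particular instrument.

```python
import math

K_B = 1.380649e-23       # Boltzmann constant, J/K
Q_E = 1.602176634e-19    # elementary charge, C


def johnson_noise_vrms(resistance_ohm, temperature_k, bandwidth_hz):
    """RMS thermal (Johnson-Nyquist) noise voltage: sqrt(4 k T R B)."""
    return math.sqrt(4 * K_B * temperature_k * resistance_ohm * bandwidth_hz)


def shot_noise_irms(current_a, bandwidth_hz):
    """RMS shot-noise current: sqrt(2 q I B)."""
    return math.sqrt(2 * Q_E * current_a * bandwidth_hz)


def snr_db(signal_rms, noise_rms):
    """Signal-to-noise ratio in decibels, for RMS amplitudes."""
    return 20 * math.log10(signal_rms / noise_rms)


if __name__ == "__main__":
    # Hypothetical example values, chosen only for illustration.
    v_n = johnson_noise_vrms(1e3, 300.0, 10e3)
    i_n = shot_noise_irms(1e-6, 10e3)
    print(f"thermal noise: {v_n * 1e9:.0f} nV rms")
    print(f"shot noise:    {i_n * 1e12:.1f} pA rms")
    print(f"SNR of a 10 uV signal: {snr_db(10e-6, v_n):.1f} dB")
```

Both expressions scale with the square root of bandwidth, so narrowing the measurement bandwidth is often the cheapest way to lower the noise floor. Thermal noise also scales with the square root of absolute temperature, which is why cooling helps.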

🛠️ Proven Strategies for Overcoming Sensitivity Barriers

Advancing beyond inherent sensitivity limitations requires systematic approaches that address both instrument design and measurement methodology. Engineers and scientists have developed numerous techniques, each applicable to specific situations and measurement challenges.

Signal Averaging and Integration Techniques

Repeated measurements followed by averaging exploit the statistical nature of random noise. Since uncorrelated noise fluctuates unpredictably while the true signal remains consistent, averaging N observations reduces the noise by a factor of √N, so the signal-to-noise ratio improves as √N. A tenfold improvement therefore takes a hundred readings. This simple yet powerful technique requires only time and computational resources, provided the signal itself stays stable while the readings are taken.
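The square-root behaviour of averaging is easy to check numerically. The following is a minimal simulation, assuming independent Gaussian noise; the true value, noise level, and trial count are arbitrary illustration values.

```python
import random
import statistics


def averaged_reading(true_value, noise_sd, n, rng):
    """Mean of n readings, each corrupted by independent Gaussian noise."""
    readings = [true_value + rng.gauss(0.0, noise_sd) for _ in range(n)]
    return statistics.fmean(readings)


def scatter_of_averages(n, trials=1000, true_value=1.0, noise_sd=0.5, seed=0):
    """Standard deviation of the averaged result over many repeated experiments."""
    rng = random.Random(seed)
    results = [averaged_reading(true_value, noise_sd, n, rng) for _ in range(trials)]
    return statistics.stdev(results)


if __name__ == "__main__":
    for n in (1, 4, 16, 100):
        predicted = 0.5 / n ** 0.5   # noise_sd / sqrt(N)
        print(f"N={n:3d}  scatter={scatter_of_averages(n):.3f}  predicted~{predicted:.3f}")
```

The measured scatter should track the prediction closely. If the noise is correlated between readings, or the signal drifts during acquisition, the improvement will be smaller than this idealized case suggests.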

Lock-in amplification refines this idea. The measured signal is modulated at a known reference frequency, and phase-sensitive detection then extracts only the signal component at that frequency and in a fixed phase relationship to the reference. This approach achieves remarkable noise rejection, enabling detection of signals buried hundreds or thousands of times below noise levels.
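Phase-sensitive detection can be sketched in software in a few lines: multiply the input by sine and cosine references, then average. The code below is a simplified digital model, not a description of any specific lock-in instrument; the `simulate` helper, the 137 Hz reference, and the noise level are hypothetical test values.

```python
import math
import random


def lock_in(samples, sample_rate_hz, ref_freq_hz):
    """Digital phase-sensitive detection.

    Multiplies the input by in-phase and quadrature references, then averages.
    Returns (amplitude, phase_rad) of the component at ref_freq_hz.
    """
    n = len(samples)
    x = y = 0.0
    for k, s in enumerate(samples):
        theta = 2 * math.pi * ref_freq_hz * k / sample_rate_hz
        x += s * math.sin(theta)   # in-phase product
        y += s * math.cos(theta)   # quadrature product
    x, y = 2 * x / n, 2 * y / n    # the average acts as the low-pass filter
    return math.hypot(x, y), math.atan2(y, x)


def simulate(amplitude, phase_rad, noise_sd, sample_rate_hz, ref_freq_hz, seconds, seed=1):
    """Hypothetical test signal: a sine at the reference frequency plus Gaussian noise."""
    rng = random.Random(seed)
    n = int(sample_rate_hz * seconds)
    return [
        amplitude * math.sin(2 * math.pi * ref_freq_hz * k / sample_rate_hz + phase_rad)
        + rng.gauss(0.0, noise_sd)
        for k in range(n)
    ]


if __name__ == "__main__":
    fs, f_ref = 10_000, 137.0
    # Signal amplitude is 20 times smaller than the noise standard deviation.
    data = simulate(amplitude=0.05, phase_rad=0.4, noise_sd=1.0,
                    sample_rate_hz=fs, ref_freq_hz=f_ref, seconds=20)
    amp, phase = lock_in(data, fs, f_ref)
    print(f"recovered amplitude ~ {amp:.3f} (true 0.05), phase ~ {phase:.2f} rad")
```

The longer the averaging time, the narrower the effective bandwidth around the reference frequency and the better the noise rejection. The record should span a whole number of reference periods for the amplitude estimate to be unbiased.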

Boxcar averaging applies to repetitive transient signals, sampling at precise intervals synchronized to signal occurrence. By accumulating measurements from multiple cycles, this technique reveals signal characteristics otherwise lost in noise, proving invaluable for spectroscopy and pulsed measurement systems.

🎯 Optimizing Detector Technology

Modern detector advances continually push sensitivity boundaries. Photomultiplier tubes achieve single-photon detection through cascaded electron multiplication. Avalanche photodiodes provide similar capabilities in compact solid-state packages. Charge-coupled devices (CCDs) and complementary metal-oxide-semiconductor (CMOS) sensors accumulate photons over extended periods, revealing extremely faint optical signals.

Cryogenic cooling reduces thermal noise by operating detectors at liquid nitrogen or helium temperatures. While adding complexity and cost, this approach proves essential for applications demanding ultimate sensitivity, including astronomical observation, infrared spectroscopy, and quantum computing.

Emerging technologies promise further improvements. Superconducting detectors operate at temperatures near absolute zero, achieving quantum-limited sensitivity. Metamaterials engineered at nanoscale dimensions enhance light-matter interactions, boosting detector response. Quantum sensing leverages entanglement and superposition for measurements approaching theoretical limits.

🔧 Environmental Control and Isolation Methods

Controlling the measurement environment often yields greater sensitivity improvements than upgrading instrumentation. Systematic environmental management addresses noise sources at their origin rather than attempting to filter out interference after the signal has already been corrupted.

Vibration isolation employs various strategies depending on frequency ranges. Pneumatic isolation tables float on compressed air cushions, absorbing low-frequency building vibrations. Active isolation systems use sensors and actuators to cancel disturbances in real time. Simple elastomeric mounts provide cost-effective high-frequency isolation.

Electromagnetic shielding blocks external interference while preventing instrument emissions. Faraday cages constructed from conductive materials intercept electromagnetic waves. Mu-metal shields redirect magnetic fields around sensitive components. Careful grounding practices eliminate ground loops that couple noise between interconnected equipment.

Temperature stabilization maintains consistent instrument performance. Precision ovens hold critical components at constant elevated temperatures, eliminating thermal drift. Thermoelectric coolers actively regulate temperatures with fast response. Simple thermal mass and insulation provide passive stabilization for less demanding applications.

🌡️ Creating Optimal Measurement Conditions

Beyond basic environmental control, optimized measurement protocols maximize effective sensitivity. Baseline correction removes systematic offsets that consume dynamic range. Background subtraction eliminates static interference patterns. Chopping techniques modulate measured signals, shifting them away from low-frequency noise concentrations.

Sample preparation significantly influences measurement quality. Proper surface treatment reduces scattering and reflection losses in optical measurements. Clean handling procedures prevent contamination that introduces spurious signals. Appropriate sample geometry optimizes signal collection efficiency.

📈 Digital Signal Processing for Enhanced Sensitivity

Computational approaches extract maximum information from acquired data, effectively increasing sensitivity without hardware modifications. Digital signal processing has revolutionized measurement science, making previously impossible measurements routine.

Digital filtering removes noise outside signal frequency ranges. Low-pass filters eliminate high-frequency interference. High-pass filters suppress drift and baseline fluctuations. Band-pass filters isolate signals within specific frequency windows. Adaptive filters automatically adjust characteristics based on signal properties, providing optimal performance across varying conditions.
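As a concrete illustration, the simplest low-pass and high-pass filters can be written without any signal processing library. The sketch below uses a single-pole recursive filter, which is only one of many possible designs; the cutoff and sample rate values in the demo are assumptions for illustration.

```python
import math


def low_pass(samples, cutoff_hz, sample_rate_hz):
    """Single-pole recursive low-pass filter (exponential smoothing)."""
    if not samples:
        return []
    alpha = 1.0 - math.exp(-2.0 * math.pi * cutoff_hz / sample_rate_hz)
    out = []
    y = samples[0]                 # start at the first sample to avoid a step transient
    for x in samples:
        y += alpha * (x - y)
        out.append(y)
    return out


def high_pass(samples, cutoff_hz, sample_rate_hz):
    """Complementary high-pass: the input minus its low-passed version."""
    slow = low_pass(samples, cutoff_hz, sample_rate_hz)
    return [x - s for x, s in zip(samples, slow)]


def rms(values):
    """Root-mean-square amplitude of a sequence."""
    return math.sqrt(sum(v * v for v in values) / len(values))


if __name__ == "__main__":
    fs = 1000.0
    # Hypothetical data: a constant level of 1.0 plus 200 Hz interference.
    data = [1.0 + 0.5 * math.sin(2 * math.pi * 200 * k / fs) for k in range(2000)]
    smooth = low_pass(data, cutoff_hz=5.0, sample_rate_hz=fs)
    ripple_in = rms([d - 1.0 for d in data[500:]])
    ripple_out = rms([s - 1.0 for s in smooth[500:]])
    print(f"interference reduced by a factor of about {ripple_in / ripple_out:.0f}")
```

A single pole rolls off gently, so interference close to the signal band calls for a higher-order design. A band-pass response can be approximated by cascading a high-pass and a low-pass stage.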

Fourier analysis decomposes complex signals into frequency components, revealing periodic structures hidden in time-domain data. Fast Fourier Transform algorithms enable real-time frequency analysis of continuous data streams. Windowing functions reduce spectral leakage, at the cost of a wider main lobe and therefore somewhat coarser frequency resolution.
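The effect of a window on spectral leakage can be seen with a small experiment. The sketch below uses a direct discrete Fourier transform for clarity rather than an FFT, so it is only suitable for short records; the tone frequencies and record lengths are illustrative assumptions.

```python
import cmath
import math


def hann(n):
    """Periodic Hann window of length n."""
    return [0.5 - 0.5 * math.cos(2 * math.pi * k / n) for k in range(n)]


def amplitude_spectrum(samples, window=None):
    """One-sided amplitude spectrum via a direct DFT (O(n^2), for short records).

    The result is corrected for the window's coherent gain so that a tone
    centred on a bin reads its true amplitude.
    """
    n = len(samples)
    w = [1.0] * n if window is None else window
    gain = sum(w) / n
    xs = [s * wk for s, wk in zip(samples, w)]
    spectrum = []
    for m in range(n // 2 + 1):
        acc = sum(x * cmath.exp(-2j * math.pi * m * k / n) for k, x in enumerate(xs))
        # DC and (for even n) Nyquist bins have no mirror image to fold in.
        scale = 1.0 if m == 0 or (n % 2 == 0 and m == n // 2) else 2.0
        spectrum.append(scale * abs(acc) / (n * gain))
    return spectrum


if __name__ == "__main__":
    n = 128
    # 10.5 cycles per record: the tone falls between bins, the worst case for leakage.
    tone = [math.sin(2 * math.pi * 10.5 * k / n) for k in range(n)]
    plain = amplitude_spectrum(tone)
    windowed = amplitude_spectrum(tone, hann(n))
    print(f"leakage at bin 40, no window:   {plain[40]:.2e}")
    print(f"leakage at bin 40, Hann window: {windowed[40]:.2e}")
```

Far from the tone, the windowed spectrum should show much lower leakage, which is what lets a weak component be seen next to a strong one. Close to the tone the Hann main lobe is wider than the unwindowed one, which is the resolution trade-off.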

🤖 Machine Learning Applications in Signal Enhancement

Artificial intelligence brings new capabilities to sensitivity enhancement. Neural networks trained on clean signals learn to recognize signal characteristics even when severely degraded by noise. This approach proves particularly effective for complex signals lacking simple mathematical descriptions.

Deep learning models automatically discover optimal signal processing strategies from training data, often surpassing hand-crafted algorithms. Convolutional neural networks excel at image denoising and feature extraction. Recurrent networks handle temporal sequences, predicting signal continuation from noisy observations.

Anomaly detection algorithms identify unusual events within noisy data streams, essentially functioning as ultra-sensitive change detectors. These methods find applications in predictive maintenance, quality control, and security monitoring where rare events carry critical importance.
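Change detection does not have to involve a trained model. A classical statistical baseline is the cumulative sum (CUSUM) detector, sketched below as one possible approach rather than the specific method any given product uses. The slack `k` and threshold `h` are expressed in units of the noise standard deviation; the values 0.5 and 5 are common starting points and are assumptions here.

```python
def cusum_alarm(samples, target, sigma, k=0.5, h=5.0):
    """Two-sided tabular CUSUM.

    Accumulates standardized deviations from `target` beyond a slack of k,
    and returns the index of the first sample where either sum exceeds h.
    Returns None if no alarm is raised.
    """
    upper = lower = 0.0
    for i, x in enumerate(samples):
        z = (x - target) / sigma
        upper = max(0.0, upper + z - k)   # evidence for an upward shift
        lower = max(0.0, lower - z - k)   # evidence for a downward shift
        if upper > h or lower > h:
            return i
    return None


if __name__ == "__main__":
    # Hypothetical stream: on target for 20 samples, then shifted up by one sigma.
    stream = [0.0] * 20 + [1.0] * 20
    print("first alarm at index:", cusum_alarm(stream, target=0.0, sigma=1.0))
```

Because evidence accumulates across samples, a shift of a single standard deviation, too small to flag on any individual reading, still raises an alarm after a short delay. Lowering `h` shortens the delay at the price of more false alarms.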

🎓 Industry-Specific Sensitivity Solutions

Different fields face unique sensitivity challenges requiring specialized approaches. Understanding industry-specific constraints and solutions provides practical guidance for implementation.

Medical Diagnostics and Biosensing

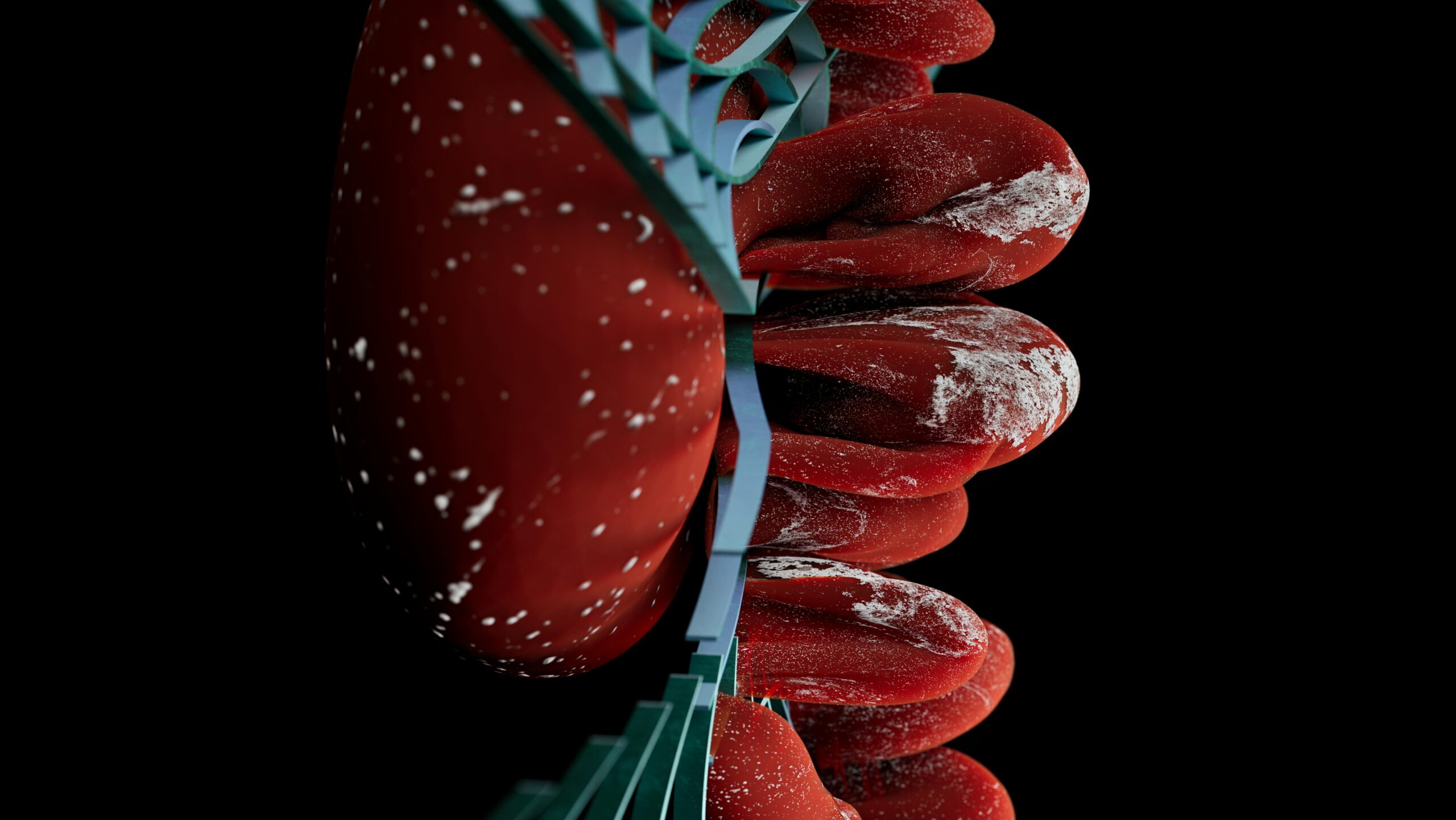

Clinical applications demand detection of minute biomarker concentrations indicating disease presence. Enzyme-linked immunosorbent assays (ELISA) amplify molecular recognition events through enzymatic signal generation. Polymerase chain reaction (PCR) exponentially multiplies genetic material, enabling detection of single DNA molecules.
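The exponential gain of PCR follows a simple model: each cycle multiplies the copy number by (1 + E), where E is the per-cycle efficiency between 0 and 1. The sketch below uses that idealized model; the function names and the detection threshold of 1e9 copies are hypothetical, and real reactions plateau as reagents are consumed.

```python
import math


def copies_after(initial_copies, cycles, efficiency=1.0):
    """Idealized PCR amplification: N = N0 * (1 + E) ** cycles."""
    return initial_copies * (1.0 + efficiency) ** cycles


def cycles_to_reach(initial_copies, threshold_copies, efficiency=1.0):
    """Smallest whole number of cycles at which the model reaches the threshold."""
    if threshold_copies <= initial_copies:
        return 0
    cycles = math.log(threshold_copies / initial_copies) / math.log(1.0 + efficiency)
    return math.ceil(cycles)


if __name__ == "__main__":
    print(f"1 copy after 30 perfect cycles: {copies_after(1, 30):.3g}")
    print("cycles to reach 1e9 copies at 90% efficiency:",
          cycles_to_reach(1, 1e9, efficiency=0.9))
```

The model shows why a single starting molecule becomes detectable after a few dozen cycles, and why small losses in efficiency add several cycles to the time needed to cross a detection threshold.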

Surface-enhanced Raman spectroscopy (SERS) can increase Raman scattering signals by factors exceeding one million through plasmonic enhancement. This technique enables label-free molecular identification at extremely low concentrations. Electrochemical biosensors can achieve femtomolar detection limits by measuring electrical current from redox reactions occurring at functionalized electrodes.

🏭 Industrial Process Monitoring

Manufacturing quality depends on detecting subtle deviations from specifications before defects accumulate. Statistical process control combined with high-sensitivity measurements enables early intervention. Inline sensors continuously monitor critical parameters, triggering alerts when trends indicate impending specification violations.
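One simple way to turn a trend into an early alert is to fit a straight line through recent readings and extrapolate to the specification limit. The sketch below is a minimal example under that assumption; the readings and the limit are made-up values, and a production system would also account for the uncertainty of the fit.

```python
def fit_trend(readings):
    """Ordinary least-squares line through equally spaced readings.

    Returns (slope_per_sample, fitted_value_at_latest_sample).
    """
    n = len(readings)
    if n < 2:
        raise ValueError("need at least two readings")
    mean_x = (n - 1) / 2
    mean_y = sum(readings) / n
    sxx = sum((i - mean_x) ** 2 for i in range(n))
    sxy = sum((i - mean_x) * (y - mean_y) for i, y in enumerate(readings))
    slope = sxy / sxx
    return slope, mean_y + slope * (n - 1 - mean_x)


def samples_until_limit(readings, upper_spec_limit):
    """Estimated number of further samples before the trend crosses the upper limit.

    Returns None when the fitted trend is flat or falling.
    """
    slope, latest = fit_trend(readings)
    if slope <= 0:
        return None
    return max(0.0, (upper_spec_limit - latest) / slope)


if __name__ == "__main__":
    recent = [10.0, 10.1, 10.2, 10.3, 10.4]   # hypothetical inline sensor readings
    remaining = samples_until_limit(recent, upper_spec_limit=11.0)
    print(f"limit reached in roughly {remaining:.0f} more samples")
```

An alert can then be raised whenever the estimate drops below the lead time needed to intervene.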

Non-destructive testing reveals internal defects without damaging products. Ultrasonic testing detects microscopic cracks and inclusions. Eddy current methods identify subsurface flaws in conductive materials. X-ray computed tomography provides three-dimensional defect visualization. Each technique requires careful sensitivity optimization for specific materials and defect types.

🌍 Environmental Monitoring Applications

Environmental protection requires detecting pollutants at concentrations well below harmful levels. Gas chromatography-mass spectrometry (GC-MS) separates chemical mixtures and then identifies individual components, with sensitivity that can reach the parts-per-trillion range. Inductively coupled plasma mass spectrometry (ICP-MS) measures trace metal concentrations in water and soil samples.

Remote sensing instruments aboard satellites monitor atmospheric composition, ocean temperatures, and vegetation health across global scales. These systems must achieve exceptional sensitivity while operating in harsh space environments without maintenance. Hyperspectral imaging captures hundreds of spectral bands, revealing subtle changes invisible to conventional cameras.

💡 Emerging Technologies Pushing Sensitivity Frontiers

Research laboratories continuously develop next-generation measurement technologies that will define future capabilities. Understanding emerging trends helps organizations prepare for coming advances.

Quantum sensors exploit quantum mechanical phenomena for unprecedented sensitivity. Nitrogen-vacancy centers in diamond enable magnetic field measurements with nanometer spatial resolution. Atomic interferometers measure acceleration and rotation with precision surpassing mechanical gyroscopes. Squeezed light reduces quantum noise below the standard quantum limit, the shot-noise level, in optical measurements.

Nanophotonic devices manipulate light at subwavelength scales, enhancing light-matter interactions. Photonic crystal cavities confine light to tiny volumes with long lifetimes, amplifying weak optical signals. Plasmonic antennas concentrate electromagnetic fields into nanoscale hotspots, enabling single-molecule detection.

Neuromorphic sensing mimics biological sensory systems, achieving efficiency and sensitivity beyond conventional approaches. Event-driven vision sensors report only pixel changes, reducing data volume while capturing rapid phenomena. Spiking neural networks process sensor data with minimal power consumption, enabling autonomous operation.

🚀 Implementing Sensitivity Improvements: Practical Roadmap

Organizations seeking enhanced measurement capabilities should follow systematic approaches ensuring successful implementation while managing costs and risks.

Initial assessment identifies current limitations and improvement opportunities. Measurement system analysis quantifies existing performance through gage repeatability and reproducibility studies. Uncertainty budgets allocate total measurement error among contributing factors, revealing which improvements yield greatest impact. Stakeholder interviews clarify performance requirements and acceptable trade-offs.
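An uncertainty budget is usually combined by root-sum-square when the contributions are independent, and the share of each term in the total variance shows where improvement effort pays off. The sketch below assumes uncorrelated contributions expressed as standard uncertainties in the same unit; the component names and values are hypothetical.

```python
import math


def combined_standard_uncertainty(components):
    """Root-sum-square of independent standard uncertainties."""
    return math.sqrt(sum(u ** 2 for u in components.values()))


def variance_shares(components):
    """Fraction of the total variance contributed by each component."""
    total = sum(u ** 2 for u in components.values())
    return {name: u ** 2 / total for name, u in components.items()}


if __name__ == "__main__":
    # Hypothetical budget, all values in the same unit (for example millivolts).
    budget = {
        "detector noise": 0.30,
        "amplifier drift": 0.10,
        "temperature": 0.05,
        "calibration standard": 0.02,
    }
    print(f"combined uncertainty: {combined_standard_uncertainty(budget):.3f}")
    for name, share in sorted(variance_shares(budget).items(), key=lambda kv: -kv[1]):
        print(f"  {name:22s} {share:6.1%}")
```

Because the terms add in quadrature, the largest contribution dominates. Halving a minor term barely changes the total, whereas halving the dominant one nearly halves it.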

Prioritization balances sensitivity improvements against costs, timeline, and technical risks. Quick wins providing immediate benefits with minimal investment build momentum. Strategic investments address fundamental limitations requiring substantial resources but enabling transformative capabilities. Proof-of-concept demonstrations validate approaches before full-scale implementation.

📋 Validation and Continuous Improvement

Rigorous validation ensures enhanced systems meet requirements. Calibration establishes traceability to recognized standards. Linearity testing verifies response across measurement ranges. Detection limit studies determine minimum reliably measurable quantities. Interference testing confirms immunity to environmental factors.
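A detection limit study often reduces to a short calculation on blank measurements and the calibration slope. The sketch below follows one widely used convention, with the limit of detection at 3.3 σ / slope and the limit of quantitation at 10 σ / slope; other conventions exist, and the blank readings and slope shown are invented for illustration.

```python
import statistics


def detection_and_quantitation_limits(blank_readings, calibration_slope,
                                      k_detect=3.3, k_quant=10.0):
    """Return (LOD, LOQ) in concentration units.

    blank_readings: repeated instrument responses for a blank sample.
    calibration_slope: response per unit concentration (sensitivity).
    """
    sigma = statistics.stdev(blank_readings)
    lod = k_detect * sigma / calibration_slope
    loq = k_quant * sigma / calibration_slope
    return lod, loq


if __name__ == "__main__":
    blanks = [0.011, 0.009, 0.012, 0.010, 0.008, 0.010, 0.011]   # hypothetical responses
    slope = 0.25                                                   # hypothetical response per ng/mL
    lod, loq = detection_and_quantitation_limits(blanks, slope)
    print(f"LOD ~ {lod:.3f} ng/mL, LOQ ~ {loq:.3f} ng/mL")
```

The formula makes the two levers explicit: a steeper calibration slope (higher sensitivity) or a quieter blank (lower noise) each lowers the detection limit.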

Continuous monitoring maintains performance over time. Control charts track key metrics, revealing drift requiring correction. Periodic recalibration compensates for component aging. Preventive maintenance addresses potential failures before affecting measurements. Regular training keeps operators current with best practices.

🎯 Achieving Measurement Excellence Through Sensitivity Optimization

Superior measurement capability emerges from thoughtfully combining multiple sensitivity enhancement strategies. No single approach solves all challenges—effective solutions integrate hardware improvements, environmental control, signal processing, and operational procedures into cohesive systems.

Success requires understanding fundamental sensitivity limitations, available enhancement techniques, and specific application requirements. Organizations must balance competing priorities including sensitivity, speed, cost, complexity, and robustness. Strategic investments in measurement infrastructure deliver competitive advantages through improved product quality, faster development cycles, and better-informed decision-making.

The pursuit of enhanced sensitivity drives innovation across scientific instruments, industrial sensors, and consumer devices. As technology advances, previously impossible measurements become routine, opening new research directions and enabling novel applications. Organizations embracing sensitivity optimization position themselves at the forefront of their fields, equipped to tackle tomorrow’s measurement challenges with today’s preparation.

Measurement science continues to evolve rapidly, with quantum technologies, artificial intelligence, and nanoscale engineering promising substantial new capabilities. Organizations that establish robust measurement foundations today and stay engaged with emerging developments will be ready to adopt these advances as they mature.

Toni Santos is a health systems analyst and methodological researcher specializing in the study of diagnostic precision, evidence synthesis protocols, and the structural delays embedded in public health infrastructure. Through an interdisciplinary and data-focused lens, Toni investigates how scientific evidence is measured, interpreted, and translated into policy across institutions, funding cycles, and consensus-building processes.

His work is grounded in a fascination with measurements not only as technical capacities, but as carriers of hidden assumptions. From unvalidated diagnostic thresholds to consensus gaps and resource allocation bias, Toni uncovers the structural and systemic barriers through which evidence struggles to influence health outcomes at scale.

With a background in epidemiological methods and health policy analysis, Toni blends quantitative critique with institutional research to reveal how uncertainty is managed, consensus is delayed, and funding priorities encode scientific direction. As the creative mind behind Trivexono, Toni curates methodological analyses, evidence synthesis critiques, and policy interpretations that illuminate the systemic tensions between research production, medical agreement, and public health implementation.

His work is a tribute to: the invisible constraints of Measurement Limitations in Diagnostics; the slow mechanisms of Medical Consensus Formation and Delay; the structural inertia of Public Health Adoption Delays; and the directional influence of Research Funding Patterns and Priorities.

Whether you're a health researcher, policy analyst, or curious observer of how science becomes practice, Toni invites you to explore the hidden mechanisms of evidence translation: one study, one guideline, one decision at a time.